Over the past weekend, we released major new versions of our entire product line: Analytic Solver® V2023 for Excel (Desktop and Cloud versions), our cloud platform RASON® V2023, and our developer toolkit Solver SDK® V2023 (which now includes XLMiner® SDK). There are enhancements throughout all these products (see especially the new Model Management features in RASON), but the "headline" new capability is automated risk analysis of machine learning models -- which we'll seek to explain in this post. Since we believe there's a significant innovation here -- a new "best practice" for developing and deploying machine learning models -- and since we can only file "invention claims" prior to publication of the enhancement or any description, we've filed a provisional patent application prior to this release.

Risk analysis changes the focus from how accurately a ML model will predict a single new case, to how it will perform in aggregate over thousands or millions of new cases, what the business consequences will be, and the (quantified) risk that these outcomes will differ from what the ML model’s training and validation led us to expect.

How and Why Machine Learning has Lacked Risk Analysis

For a decade, data science and machine learning (DSML) tools – including ours – have offered facilities for ‘training’ a model on one set of data, ‘validating’ its performance on another set of data, and ‘testing’ it versus other ML models on a third set of data. But this is not risk analysis: based on known data, it doesn’t assess the risk that the ML model will perform differently on new data when put into production use. While it’s common to assess a ML model’s performance in use, and move to re-train the model if its performance is unexpectedly poor, by that time those risks have occurred, often with adverse financial consequences. Quantification of such risks “ahead of time” has been missing in practice.

There are many reasons for this state of affairs: Data scientists with expertise in ML methods often are not trained in risk analysis; they think of “features” and even predicted output values as data, not as “random variables” with sampled instances. Even when they are known, conventional risk analysis methods are expensive and time-consuming to apply to machine learning: ML data sets include many (sometimes hundreds of) features, with limited “provenance” for the data. There are hundreds of classical probability distributions that could be ‘candidates’ to fit each feature. Only some of the features are typically found, after ML model training, to have predictive value; many are found to be correlated with other features and hence ‘redundant’. And in typical projects, a great many ML models are built.

How Analytic Solver, RASON and Solver SDK Perform Automated Risk Analysis

Unlike most other DSML software, Analytic Solver, RASON and Solver SDK include powerful algorithms for risk analysis in the same package: Probability distribution fitting, correlation fitting, stratified sample generation, and Monte Carlo analysis. But asking business analysts – let alone data scientists – to “quickly master risk analysis” is asking too much. So we've invented ways to automate the entire risk analysis process. Using this new capability is as simple as checking one extra box in a dialog in Analytic Solver, adding a clause "simulation": { } in RASON, or adding a list of "Learner" objects to an "Estimator" object in Solver SDK – and the risk analysis typically adds just seconds to a minute to the existing process of training, validating, and testing a ML model. Here's an example in Analytic Solver: The user selects Data in the left-hand dialog, selects Parameters if desired for Logistic Regression (defaults are fine), then simply checks the box "Simulate Response Prediction" in the right-hand dialog -- everything else here can be a default selection, though we've added a sample size and a calculation of business consequences as extra options:

Behind the scenes, for each feature, our software tests an entire family of probability distributions – drawing on our first-mover support for the new Metalog family of distributions, created by Dr. Tom Keelin; optimizes all the parameters of each distribution; detects and models correlations among features, using rank order and copula methods; performs synthetic data generation, using Monte Carlo methods for stratified sampling and correlation; computes the ML model’s predictions as well as user-specified financial consequences, for each simulated case; and importantly, assesses and quantifies the differences in performance of the ML model on this simulated data versus the training, validation and test data.
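To make the steps above concrete, here is a minimal sketch in plain Python. It is not our product's implementation, and it simplifies heavily: each feature's distribution is approximated by its empirical quantile function rather than a fitted Metalog, correlation is modeled with a two-feature Gaussian copula driven by the rank-order (Spearman) correlation, and sampling is plain Monte Carlo rather than stratified. The data, the feature names `income` and `debt`, and the rule-based `predict_default` stand-in for a trained model are all hypothetical.

```python
import math
import random
from statistics import NormalDist

def ranks(values):
    """Return the 0-based rank of each value (ties broken by position)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, index in enumerate(order):
        result[index] = rank
    return result

def pearson(a, b):
    """Pearson correlation coefficient of two equal-length lists."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((p - mean_a) * (q - mean_b) for p, q in zip(a, b))
    var_a = sum((p - mean_a) ** 2 for p in a)
    var_b = sum((q - mean_b) ** 2 for q in b)
    return cov / math.sqrt(var_a * var_b)

def spearman(a, b):
    """Rank-order (Spearman) correlation: Pearson correlation of the ranks."""
    return pearson(ranks(a), ranks(b))

def empirical_quantile(sorted_values, u):
    """Inverse empirical CDF with linear interpolation, for 0 <= u <= 1."""
    position = u * (len(sorted_values) - 1)
    low = int(position)
    high = min(low + 1, len(sorted_values) - 1)
    weight = position - low
    return sorted_values[low] * (1 - weight) + sorted_values[high] * weight

def synthesize(x, y, n, rng):
    """Draw n synthetic (x, y) pairs via a Gaussian copula."""
    # Convert the rank correlation into the copula's correlation parameter.
    rho = 2 * math.sin(math.pi * spearman(x, y) / 6)
    sorted_x, sorted_y = sorted(x), sorted(y)
    standard_normal = NormalDist()
    pairs = []
    for _ in range(n):
        z1 = rng.gauss(0, 1)
        z2 = rho * z1 + math.sqrt(1 - rho ** 2) * rng.gauss(0, 1)
        # Map correlated normals to uniforms, then through each marginal.
        u1, u2 = standard_normal.cdf(z1), standard_normal.cdf(z2)
        pairs.append((empirical_quantile(sorted_x, u1),
                      empirical_quantile(sorted_y, u2)))
    return pairs

def predict_default(income_value, debt_value):
    """Hypothetical stand-in for a trained classifier."""
    return debt_value > 0.4 * income_value

# Hypothetical "training" data: two correlated features.
rng = random.Random(0)
income = [rng.lognormvariate(11, 0.4) for _ in range(500)]
debt = [0.3 * value * rng.uniform(0.5, 1.5) for value in income]

synthetic = synthesize(income, debt, 2000, rng)

# Compare how often the model flags a case on each data set.
train_rate = sum(predict_default(i, d) for i, d in zip(income, debt)) / len(income)
synthetic_rate = sum(predict_default(i, d) for i, d in synthetic) / len(synthetic)
print(f"flagged on training data:  {train_rate:.3f}")
print(f"flagged on synthetic data: {synthetic_rate:.3f}")
```

A gap between the two rates is the kind of signal the risk analysis looks for. In a toy like this, part of any gap comes from the crude copula and marginal models themselves, which is one reason the quality of the distribution and correlation fitting matters.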

In Analytic Solver for Excel, the user sees results of the risk analysis in automatically-generated charts, statistics, and risk measures – drawing from our existing Monte Carlo simulation UI features, based on user feedback over many years. RASON and Solver SDK aren't chart-drawing tools themselves, but both products immediately make available all the data needed to draw charts, and all the statistics and risk measures.

Synthetic Data Generation as a Side Benefit

Synthetic Data Generation (SDG) has become topical in machine learning in recent years, with a number of companies founded just to supply software and services around this technology. SDG is used when there isn’t enough original data, or when use of the original data is restricted by law or regulation. But until now, SDG has simply been used to better train ML models.

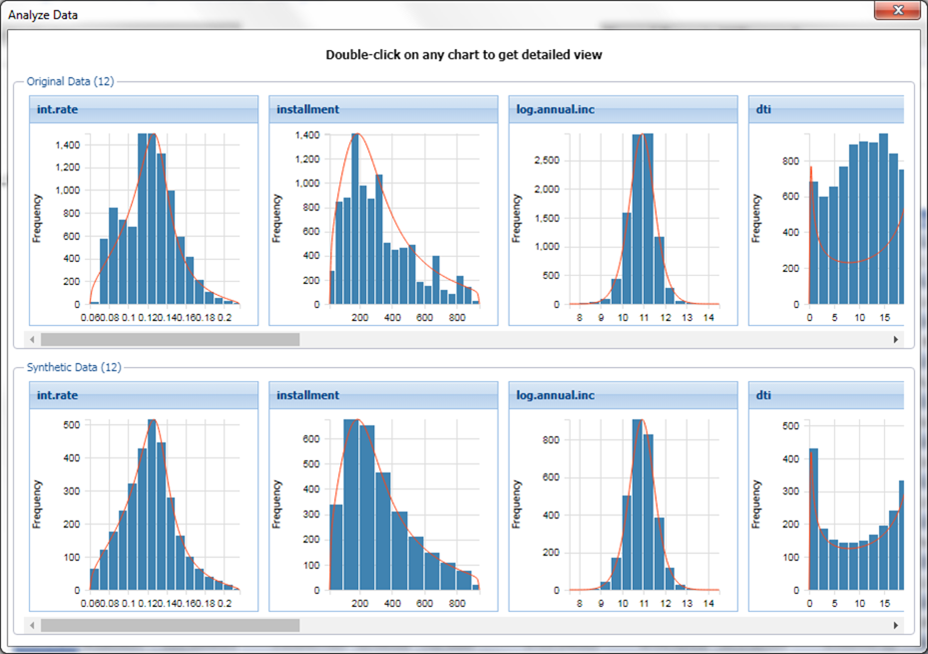

Analytic Solver, RASON and Solver SDK V2023 include a powerful, general-purpose, easy to use Synthetic Data Generation facility -- and you can use it, if you like, to better train a ML model. Unlike some special-purpose SDG offerings, this facility can accurately model the behavior of nearly any combination of features with continuous values. Below is a display from Analytic Solver in Excel, showing comparative frequency distributions of training data versus synthetic data. But our software also uses synthetic data in an entirely new way, to analyze the risk that a ML model will yield unexpected results “large enough to matter” when deployed for production use.

What Risk Analysis of Machine Learning Models Can Mean in Practice

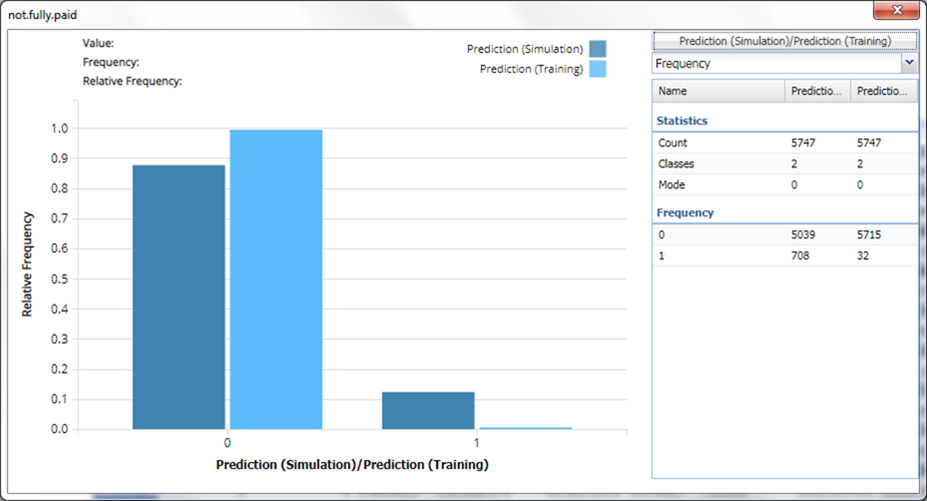

In our patent disclosure, we show through examples what this can mean, for a popular real-world loan dataset from Kaggle: A first try using a Classification Tree, with pruning of the tree based on the validation set, yields a very poor model that won't classify any future cases as likely to default. A second try using Logistic Regression yields a better model that initially "passes muster", but the risk analysis shows that this model will likely perform differently on future cases, "over-classifying" a significant number of low-risk applicants as likely to default:

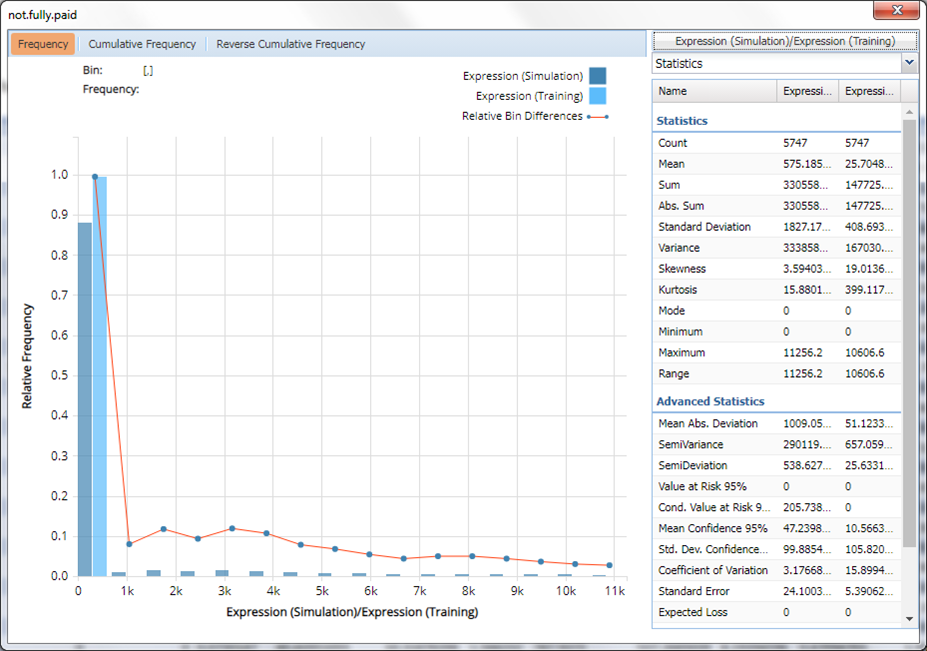

Even more interesting to a financial manager is the distribution of possible loan losses on approved loans, and how this differs between the training data and the simulated future data:

A third try using an Ensemble of Classification Trees combined via Bagging yields a model that does perform acceptably well on simulated future data. Details are omitted here, but the important point is that the risk analysis alerted us to the possibility of unexpected model performance "in production" -- before it happened -- and motivated us to keep trying until we had a model that we could be confident would perform well in production use.
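The loan-loss comparison described above can be illustrated with a small Monte Carlo sketch. Everything here is a hypothetical assumption for illustration, not a figure from our patent disclosure or the Kaggle dataset: the two default rates, the fixed loss per defaulted loan, the portfolio size, and the helper names `simulate_total_losses` and `risk_summary`. The idea is simply to compare the distribution of total losses implied by the training data with the one implied by simulated future data.

```python
import random

def simulate_total_losses(default_rate, loss_per_default, n_loans, n_trials, rng):
    """Return one total portfolio loss per Monte Carlo trial."""
    totals = []
    for _ in range(n_trials):
        # Each approved loan defaults independently with the given rate.
        defaults = sum(rng.random() < default_rate for _ in range(n_loans))
        totals.append(defaults * loss_per_default)
    return totals

def risk_summary(totals, level=0.95):
    """Mean loss, plus the loss at the given percentile level of the trials."""
    ordered = sorted(totals)
    index = min(len(ordered) - 1, int(level * len(ordered)))
    return {"mean": sum(ordered) / len(ordered), "tail_loss": ordered[index]}

rng = random.Random(42)

# Hypothetical inputs: a 4% default rate on approved loans as seen in training,
# versus 6% on simulated future cases; 10,000 lost per default; 500 loans.
expected = risk_summary(simulate_total_losses(0.04, 10_000, 500, 400, rng))
simulated = risk_summary(simulate_total_losses(0.06, 10_000, 500, 400, rng))

print("training-based:  ", expected)
print("simulated future:", simulated)
```

A financial manager would typically look at the tail figure as well as the mean, since a modest shift in the default rate can move the high-percentile loss by more than the average.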

Works with Already-Available ‘Augmented Machine Learning’

Our previous releases Analytic Solver V2021.5 and RASON and Solver SDK V2022 introduced “augmented machine learning” capabilities otherwise found only in advanced machine learning tools. The user simply supplies data and selects a menu option, “Find Best Model”: our software will automatically test and fit parameters for multiple types of machine learning models – classification and regression trees, neural networks, linear and logistic regression, discriminant analysis, naïve Bayes, k-nearest neighbors and more – then validate and compare them according to user-chosen criteria, and deliver the model that best fits the data. Analytic Solver V2021.5 also featured enhancements that enable multi-stage “data science workflows”, including machine learning models built and tested in Excel, to be deployed automatically to our RASON® cloud platform for decision intelligence.

But now that it’s possible and even easy, users will want to assess the risk of a ML model before it is deployed. With a few mouse clicks, Analytic Solver V2023’s automated risk analysis can be applied to the model delivered by “Find Best Model”. When the analyst or decision-maker is satisfied, with a few more mouse clicks, the model can be deployed as a cloud service with an easy-to-use REST API. Users who prefer a high-level modeling language over Excel can use "Find Best Model" in RASON, while developers who just want to write code can do the same thing in C++, C#, Java, R or Python using Solver SDK.

We believe that risk analysis of the kind we've just described will become a new "best practice", part of the process of developing and deploying machine learning models. But even "once the light bulb goes on" and the value of such risk analysis is recognized, it has to be easy enough and quick enough to be practical in time-pressured AI and machine learning projects. In Analytic Solver V2023, RASON V2023 and Solver SDK V2023, we believe we've met that bar in both speed and ease of use.